Friday, 29 December 2023

मौन की ताकत (The Power of Silence)

The Garbage Truck Story

Wednesday, 9 August 2023

You'll have to save yourself as no one is coming to save you.

Your parents aren’t coming to save you. They’ve done that often enough. Or maybe never at all. Either way, they’re not coming now. You’re all grown. Maybe not grown up, but grown. They’ve got their own stuff to take care of.

Your best friend isn’t coming to save you. He’ll always love you, but he’s knee deep in the same shit you’re in. Work. Love. Health. Staying sane. You know, the usual. You should check in with him some time. But don’t expect him to save you.

Your boss is not coming to save you. Your boss is trying to cover her ass right now. She’s afraid she might get fired. She’s fighting hard to keep everyone on the team. She’s worried about you, but she has no time to save you.

Your high school teacher won’t come and save you. You were always her favorite, and that’s why she tried to equip you as best as she could. But the moment you tossed that hat in the air, you were out of her reach.

Your network is not going to save you. What does that even mean? Isn’t a network supposed to be just friends? How good are those contacts really? Are they just that? Contacts? Would you call them at 11 PM on a Friday? No, those people surely won’t save you.

Obama isn’t coming to save you. He already did his part. He played it well, didn’t he? We can be grateful for leaders like Obama. But they won’t come and save us. They can only do so much.

Your partner won’t come and save you. The last time they tried, they broke up with their ex, and that’s why now, they’re with you. You both agreed you wouldn’t. No more knight in shining armor crap. Just two people, driving in the same lane. Wasn’t that the deal? Honor it. Don’t force your partner to save you.

The news media aren’t here to save you. In fact, they couldn’t care less whether you live or die. The news media are here to exploit you. They sneak into your inbox and feeds, hoping to steal your attention. They throw nightmare headlines at you that’ll suck away your energy. Forget the news. The news will destroy you.

The internet won’t save you. There are some nice people on there. They send helpful things to your inbox. But they’ll also ask you for money. Yeah, they want more money too, just like you. Others aren’t so nice. They’ll also ask for money, but they’re not really helping. They just pretend to be your friend. You can’t live on the internet. It’s just a tool — and tools alone can’t save you.

Your college drinking gang won’t come to save you. God knows where they are. One in jail, one happily married, one on a yacht somewhere? That sounds about right. Unless you wanna have a drink, you probably needn’t pick up the phone. It was the drinking that bound you together. Not the saving each other. That was never part of the deal.

Your audience is not coming to save you. If you have one to begin with. Maybe it’s an audience of ten. They only follow you for your puns. They’re on Twitter for themselves, not for you. If you haven’t helped them with something big, why should they save you?

Your gym trainer will not save you. He’s mostly staring at your ass while hoping his influencer game picks up enough so he can get out of this dump. “Am I big enough to sell supplements yet?” “Yeah, yeah, do another 50 crunches.” By the way, those also won’t save you.

Your financial advisor won’t save you. In fact, he’s probably losing you money. Does he cost more than he earns? Index funds? Tech stocks? Really? You could’ve figured that out on your own. And yet, here he is, collecting his $2,000 fee. I wonder who he’s really advising.

No one is coming to save you — because that’s not how life works.

“Doctors won’t make you healthy. Nutritionists won’t make you slim. Teachers won’t make you smart. Gurus won’t make you calm. Mentors won’t make you rich. Trainers won’t make you fit. Ultimately, you have to take responsibility. [You have to] save yourself.”

Every minute you spend wishing, waiting, hoping someone else will come and save you is a minute not spent saving yourself.

You’re the only one who can give you the gift of freedom.

Freedom from ignorance. Freedom from misery. Freedom from poverty, from sickness, from anxiety and judgment — even freedom from your own mind.

You have to do it. It has to be you. You have to own every single thing that happens in your life. The results you create. The curveballs life throws at you. The mess other people cause that you have to get out of. You must own it all.

You have to let go of people’s opinions. You have to let go of bad habits. You have to stop overspending, underworking, overeating, underestimating, overvaluing, or whatever else you’re doing too much of or too little for.

No one will do it for you. Not because you’re alone or because no one wants to help you or because the world is just a mean place. None of those things are true. No. No one will save you because no one else can.

You are the only one on this planet who can reach into the deepest depths of your soul and pull out every last spark of life that rests within it. You have to do it. It has to be you.

No one is coming to save you — and no one will have to if you save yourself.

Monday, 7 August 2023

How can big data analytics empower BPOs?

Brands are always on the hunt for new ways to add value to the services they bring to customers. As the consumers’ standards of good customer service continue to rise, brands must always be, or at least try to be, one step ahead of upcoming trends. Keeping up with the demands of the market means innovating constantly and creating unique strategies to stand out.

Thanks to the emergence of data analytics, brands now have a way to personalize the customer experience like never before.

How do data scientists make an impact in the BPO industry?

Around 1981, the term outsourcing entered our lexicon. Two decades later, we had the BPO boom in India, China, and the Philippines, with every street corner magically sprouting call centers. Now in 2023, the industry is transitioning into an era of analytics, attempting to harness its sea of data for profitability, efficiency, and improved customer experience.

The Information Ocean

The interaction between BPO agents and customers generates huge volumes of both structured and unstructured (text, audio) data. On the one hand, you have the call data that measures metrics such as the number of incoming calls, time taken to address issues, service levels, and the ratio of handled vs abandoned calls. On the other hand, you have customer data measuring satisfaction levels and sentiment.
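To make the call-data side concrete, here is a minimal sketch of how those metrics could be computed from raw call records. The record fields (`handled`, `wait_s`, `handle_s`), the 20-second threshold, and the definition of service level as "share of incoming calls answered within the threshold" are all assumptions for illustration, not a standard taken from any particular call center platform.

```python
# Hypothetical call records; field names are illustrative assumptions.
calls = [
    {"handled": True, "wait_s": 12, "handle_s": 240},
    {"handled": True, "wait_s": 45, "handle_s": 300},
    {"handled": False, "wait_s": 90, "handle_s": 0},   # caller hung up
    {"handled": True, "wait_s": 8, "handle_s": 180},
]


def call_metrics(calls, service_threshold_s=20):
    """Summarise incoming volume, abandonment, handle time and service level."""
    total = len(calls)
    handled = [c for c in calls if c["handled"]]
    abandoned = total - len(handled)
    # Calls answered within the threshold (assumed service-level definition).
    answered_in_time = sum(1 for c in handled if c["wait_s"] <= service_threshold_s)
    return {
        "incoming": total,
        "handled": len(handled),
        "abandoned": abandoned,
        "abandon_rate": abandoned / total,
        "avg_handle_s": sum(c["handle_s"] for c in handled) / len(handled),
        "service_level": answered_in_time / total,
    }


metrics = call_metrics(calls)
```

The sketch assumes at least one handled call; a real pipeline would need to guard against empty inputs.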

Insights from this data can help deliver significant value for your business, whether through higher call resolution, reduced call time and volume, agent and customer satisfaction, operational cost reduction, growth opportunities through cross-selling and upselling, or increased customer delight.

The trick is to find the balance between demand (customer calls) and supply (agents). An imbalance can often lead to revenue losses and inefficient costs and this is a dynamic that needs to be facilitated by processes and technology.

Challenges of Handling Data

When you are handling such sheer volumes of data, the challenges can be myriad. Most clients wage a daily battle with managing these vast volumes, harmonizing internal and external data, and driving value from them. For those that have already embarked on their analytical journey, the primary goals are validating the relevance of what they have built, driving scalability, and leveraging new-age predictive tools to drive ROI.

Delivering Business Value

The business value delivered from advanced Analytics in the BPO industry is unquestionable, exhaustive and primarily influences these key aspects:

1. Call Management

Planning agent resources based on demand (peak and off-peak) and skillsets, while accounting for how long agents take to resolve issues, can impact business costs. AI can automate this process to help optimize costs. An automated, real-time scheduling and resource optimization tool has led one of our BPO clients to a cost reduction of 15%.

2. Customer Experience

Call center analytics give agents access to critical data and insights to work faster and smarter, improve customer relationships and drive growth. Analytics can help understand the past behavior of a customer or of similar customers and recommend the products or services that will be most relevant, instead of generic offers. It can also predict which customers are likely to need proactive management. Real-time cross-selling analytics has led to a 20% increase in revenue.

3. Issue Resolution

First-call resolution refers to the percentage of cases that are resolved during the first call between the customer and the call center. Analytics can help automate the categorization process of contact center data by building a predictive model. This can help with a better customer servicing model achieved by appropriately capturing the nuances of customer chats with contact centers. This metric is extremely important as it helps in reducing the customer churn rate.
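As a rough illustration of the metric itself, first-call resolution can be computed as a simple percentage. The case fields below (`resolved`, `calls_needed`) are hypothetical names chosen for this sketch.

```python
# Hypothetical support cases; field names are illustrative assumptions.
cases = [
    {"resolved": True, "calls_needed": 1},
    {"resolved": True, "calls_needed": 3},
    {"resolved": True, "calls_needed": 1},
    {"resolved": False, "calls_needed": 2},
    {"resolved": True, "calls_needed": 1},
]


def first_call_resolution(cases):
    """Percentage of cases that were resolved during the very first call."""
    resolved_first = sum(
        1 for c in cases if c["resolved"] and c["calls_needed"] == 1
    )
    return 100.0 * resolved_first / len(cases)


fcr = first_call_resolution(cases)  # 3 of the 5 cases qualify
```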

4. Agent Performance

Analytics on call-center agents can assist in segmenting those who had a low-resolution rate or were spending too much time on minor issues, compared with top-performing agents. This helps the call center resolve gaps or systemic issues, identify agents with leadership potential, and create a developmental plan to reduce attrition and increase productivity.
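A very simple version of this segmentation might look like the sketch below. The agent names, the statistics, and both cut-off values are made up for illustration; in practice the thresholds would come from comparing against your top-performing agents.

```python
# Hypothetical per-agent statistics; names and numbers are invented.
agents = {
    "asha": {"resolution_rate": 0.92, "avg_minor_issue_min": 4.0},
    "ben": {"resolution_rate": 0.61, "avg_minor_issue_min": 5.5},
    "chen": {"resolution_rate": 0.88, "avg_minor_issue_min": 11.0},
}


def segment_agents(agents, min_resolution_rate=0.75, max_minor_issue_min=8.0):
    """Group agents by low resolution rate or slow handling of minor issues."""
    segments = {"low_resolution": [], "slow_on_minor_issues": [], "on_track": []}
    for name, stats in sorted(agents.items()):
        flagged = False
        if stats["resolution_rate"] < min_resolution_rate:
            segments["low_resolution"].append(name)
            flagged = True
        if stats["avg_minor_issue_min"] > max_minor_issue_min:
            segments["slow_on_minor_issues"].append(name)
            flagged = True
        if not flagged:
            segments["on_track"].append(name)
    return segments


segments = segment_agents(agents)
```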

5. Call Routing

Analytics-based call routing is based on the premise that records of a customer’s call history or demographic profile can provide insight into which call center agent(s) has the right personality, conversational style, or combination of other soft skills to best meet their needs.

6. Speech Analytics

Detecting trends in customer interactions and analyzing audio patterns to read emotions and stress in a speaker’s voice can help reduce customer churn, boost contact center productivity, improve agent performance and reduce costs by 25%. AI tools have helped clients predict member dissatisfaction, achieving a 10% reduction in first complaints and a 20% reduction in repeat complaints.

7. Chatbots and Automation

Thanks to the wonders of automation, we can now enhance the user experience to provide personalized attention to customers available 24/7/365. Reduced average call duration and wage costs improve profitability. Self-service channels such as the help center, FAQ page, and customer portals empower customers to resolve simple issues on their own while deflecting more cases for the company.

Sunday, 2 July 2023

बुआ की राखी (Bua's Rakhi)

Thursday, 1 June 2023

तेरा मेरा रिश्ता (The Bond Between You and Me)

Sunday, 14 May 2023

Understanding the Lifecycle of Data Science

Data science is quickly evolving to be one of the hottest fields in the technology industry. With rapid advancements in computational performance that now allow for the analysis of massive datasets, we can uncover patterns and insights about user behavior and world trends to an unprecedented extent.

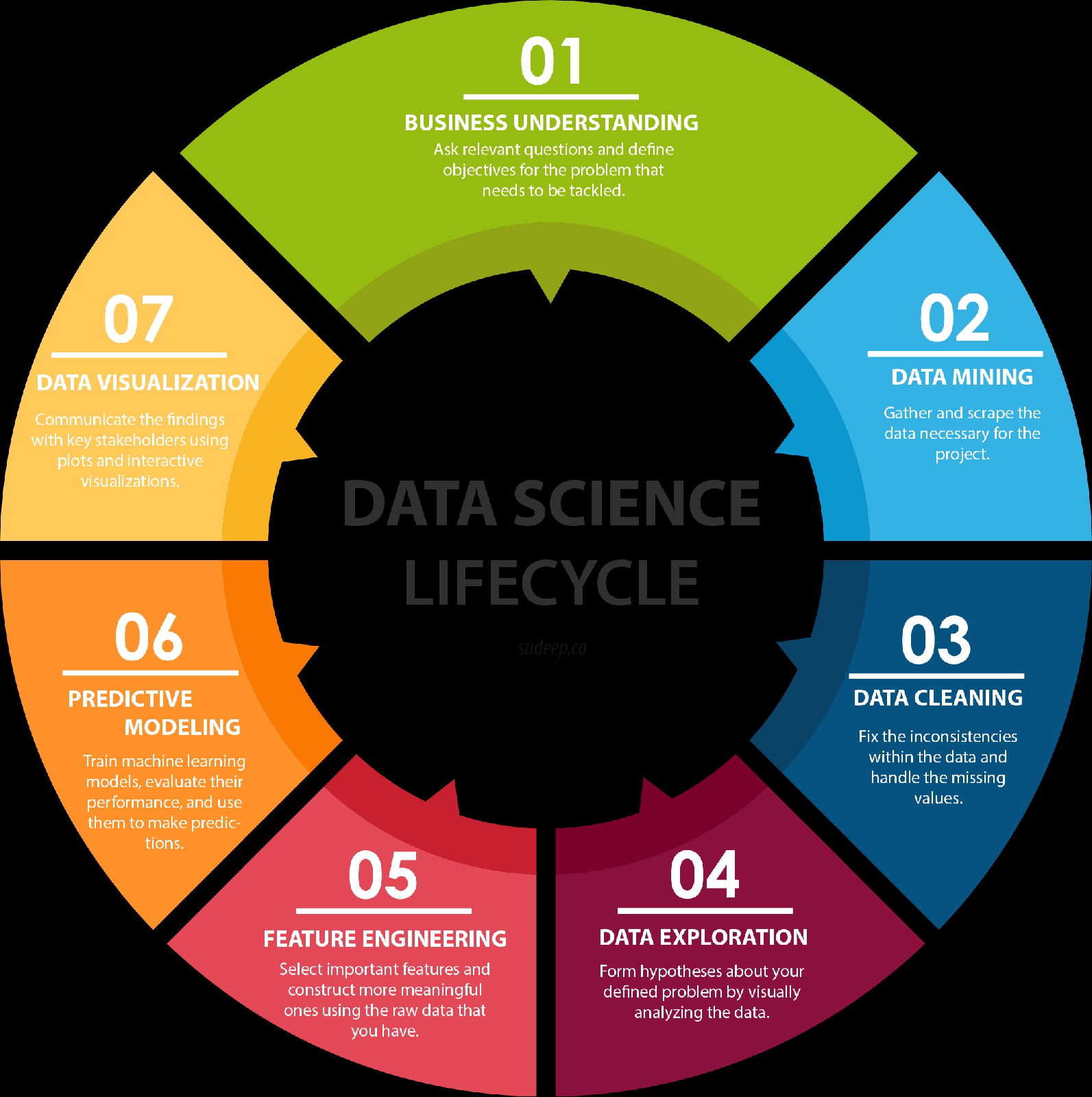

With the influx of buzzwords in the field of data science and relevant fields, a common question I’ve heard from friends is “Data science sounds pretty cool - how do I get started?” And so what started out as an attempt to explain it to a friend who wanted to get started with Kaggle projects has culminated in this post. I’ll give a brief overview of the seven steps that make up a data science lifecycle - business understanding, data mining, data cleaning, data exploration, feature engineering, predictive modeling, and data visualization. For each step, I will also provide some resources that I’ve found to be useful in my experience.

As a disclaimer, there are countless interpretations to the lifecycle (and to what data science even is), and this is the understanding that I have built up through my reading and experience so far. Data science is a quickly evolving field, and its terminology is rapidly evolving with it. If there’s something that you strongly disagree with, I’d love to hear about it!

1. Business Understanding

The data scientists in the room are the people who keep asking the why’s. They’re the people who want to ensure that every decision made in the company is supported by concrete data, and that it is guaranteed (with a high probability) to achieve results. Before you can even start on a data science project, it is critical that you understand the problem you are trying to solve.

According to Microsoft Azure’s blog, we typically use data science to answer five types of questions:

- How much or how many? (regression)

- Which category? (classification)

- Which group? (clustering)

- Is this weird? (anomaly detection)

- Which option should be taken? (recommendation)

In this stage, you should also be identifying the central objectives of your project by identifying the variables that need to be predicted. If it’s a regression, it could be something like a sales forecast. If it’s a clustering, it could be a customer profile. Understanding the power of data and how you can utilize it to derive results for your business by asking the right questions is more of an art than a science, and doing this well comes with a lot of experience. One shortcut to gaining this experience is to read what other people have to say about the topic, which is why I’m going to suggest a bunch of books to get started.

2. Data Mining

Now that you’ve defined the objectives of your project, it’s time to start gathering the data. Data mining is the process of gathering your data from different sources. Some people tend to group data retrieval and cleaning together, but each of these processes is such a substantial step that I’ve decided to break them apart. At this stage, some of the questions worth considering are - what data do I need for my project? Where does it live? How can I obtain it? What is the most efficient way to store and access all of it?

If all the data necessary for the project is packaged and handed to you, you’ve won the lottery. More often than not, finding the right data takes both time and effort. If the data lives in databases, your job is relatively simple - you can query the relevant data using SQL queries, or manipulate it using a dataframe tool like Pandas. However, if your data doesn’t actually exist in a dataset, you’ll need to scrape it. Beautiful Soup is a popular library used to scrape web pages for data. If you’re working with a mobile app and want to track user engagement and interactions, there are countless tools that can be integrated within the app so that you can start getting valuable data from customers. Google Analytics, for example, allows you to define custom events within the app which can help you understand how your users behave and collect the corresponding data.
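For the database case, here is a small sketch of what "query the relevant data using SQL" can look like. It uses Python's built-in `sqlite3` module with an in-memory database so it runs anywhere; the `events` table and its columns are invented for the example, and a real project would connect to its own database instead.

```python
import sqlite3

# In-memory database standing in for a real data source (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, event TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [
        (1, "login"), (1, "purchase"),
        (2, "login"),
        (3, "login"), (3, "purchase"), (3, "purchase"),
    ],
)

# Pull only the rows relevant to the question: purchases per user.
rows = conn.execute(
    """
    SELECT user_id, COUNT(*) AS purchases
    FROM events
    WHERE event = 'purchase'
    GROUP BY user_id
    ORDER BY user_id
    """
).fetchall()
conn.close()
```

From here, the resulting rows could be loaded into a dataframe tool like Pandas for further manipulation.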

3. Data Cleaning

Now that you’ve got all of your data, we move on to the most time-consuming step of all - cleaning and preparing the data. This is especially true in big data projects, which often involve terabytes of data to work with. According to interviews with data scientists, this process (also referred to as ‘data janitor work’) can often take 50 to 80 percent of their time. So what exactly does it entail, and why does it take so long?

This is such a time-consuming process simply because there are so many possible scenarios that could necessitate cleaning. For instance, the data could have inconsistencies within the same column, meaning that some rows could be labelled 0 or 1, and others could be labelled no or yes. The data types could also be inconsistent - some of the 0s might be integers, whereas others could be strings. If we’re dealing with a categorical data type with multiple categories, some of the categories could be misspelled or have different cases, such as having categories for both male and Male. This is just a subset of examples where you can see inconsistencies, and it’s important to catch and fix them in this stage.
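Here is a minimal sketch of how the inconsistencies described above might be cleaned up in plain Python. The helper names and the mapping of yes/no to 1/0 are my own assumptions for the example; unrecognised values are returned as `None` so they can be inspected rather than silently guessed.

```python
def normalize_flag(value):
    """Map mixed 0/1/yes/no labels (ints or strings) onto integer 0 or 1."""
    text = str(value).strip().lower()
    mapping = {"1": 1, "yes": 1, "0": 0, "no": 0}
    return mapping.get(text)  # None signals an unrecognised label


def normalize_category(value):
    """Collapse case and whitespace differences such as 'male' vs 'Male'."""
    return str(value).strip().lower()


raw_flags = [0, "1", "yes", "No", " 0 "]
clean_flags = [normalize_flag(v) for v in raw_flags]

raw_genders = ["male", "Male", " FEMALE", "female"]
clean_genders = sorted({normalize_category(v) for v in raw_genders})
```

Misspelled categories are a harder problem than case differences and usually need a hand-built correction table, which this sketch does not attempt.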

One of the issues that is often forgotten in this stage, causing a lot of problems later on, is the presence of missing data. Missing data can throw a lot of errors in model creation and training. One option is to ignore the instances which have any missing values. Depending on your dataset, this could be unrealistic if you have a lot of missing data. Another common approach is average imputation, which replaces missing values with the average of the observed values for that feature. This is not always recommended because it can reduce the variability of your data, but in some cases it makes sense.
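Both options can be sketched in a few lines. I'm using `None` to represent a missing value here, which is an assumption about how the data was loaded. The last two lines also show why average imputation reduces variability: the filled-in values sit exactly at the mean, so the variance shrinks.

```python
from statistics import mean, pvariance


def drop_missing(rows):
    """Option 1: ignore any instance that has at least one missing value."""
    return [row for row in rows if None not in row.values()]


def impute_mean(values):
    """Option 2: replace missing values with the mean of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


rows = [
    {"sleep": 7.0, "score": 80},
    {"sleep": None, "score": 65},
    {"sleep": 5.0, "score": None},
]
complete_rows = drop_missing(rows)

sleep = [7.0, None, 5.0, 6.0, None]
imputed = impute_mean(sleep)

observed_variance = pvariance([v for v in sleep if v is not None])
imputed_variance = pvariance(imputed)  # smaller than observed_variance
```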

4. Data Exploration

Now that you’ve got a sparkling clean set of data, you’re ready to finally get started in your analysis. The data exploration stage is like the brainstorming of data analysis. This is where you understand the patterns and bias in your data. It could involve pulling up and analyzing a random subset of the data using Pandas, plotting a histogram or distribution curve to see the general trend, or even creating an interactive visualization that lets you dive down into each data point and explore the story behind the outliers.

Using all of this information, you start to form hypotheses about your data and the problem you are tackling. If you were predicting student scores for example, you could try visualizing the relationship between scores and sleep. If you were predicting real estate prices, you could perhaps plot the prices as a heat map on a spatial plot to see if you can catch any trends.
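Before reaching for a plot, a quick numeric check of a hypothesis like "more sleep goes with higher scores" can be done with a Pearson correlation coefficient. The sketch below implements it by hand so it needs no libraries; the sleep and score numbers are made up.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Invented data: hours of sleep and exam scores for five students.
sleep_hours = [5, 6, 7, 8, 9]
scores = [60, 65, 70, 80, 85]

r = pearson(sleep_hours, scores)  # close to +1: strong positive relationship
```

A high correlation is only a starting hypothesis, of course; it says nothing about whether sleep actually causes the better scores.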

5. Feature Engineering

In machine learning, a feature is a measurable property or attribute of a phenomenon being observed. If we were predicting the scores of a student, a possible feature is the amount of sleep they get. In more complex prediction tasks such as character recognition, features could be histograms counting the number of black pixels.

According to Andrew Ng, one of the top experts in the fields of machine learning and deep learning, “Coming up with features is difficult, time-consuming, requires expert knowledge. ‘Applied machine learning’ is basically feature engineering.” Feature engineering is the process of using domain knowledge to transform your raw data into informative features that represent the business problem you are trying to solve. This stage will directly influence the accuracy of the predictive model you construct in the next stage.

We typically perform two types of tasks in feature engineering - feature selection and construction.

Feature selection is the process of cutting down the features that add more noise than information. This is typically done to avoid the curse of dimensionality, which refers to the increased complexity that arises from high-dimensional spaces (i.e. way too many features). I won’t go too much into detail here because this topic can be pretty heavy, but we typically use filter methods (apply a statistical measure to assign a score to each feature), wrapper methods (frame the selection of features as a search problem and use a heuristic to perform the search) or embedded methods (use machine learning to figure out which features contribute best to the accuracy).

Feature construction involves creating new features from the ones that you already have (and possibly ditching the old ones). An example of when you might want to do this is when you have a continuous variable, but your domain knowledge informs you that you only really need an indicator variable based on a known threshold. For example, if you have a feature for age, but your model only cares about if a person is an adult or minor, you could threshold it at 18, and assign different categories to instances above and below that threshold. You could also merge multiple features to make them more informative by taking their sum, difference or product. For example, if you were predicting student scores and had features for the number of hours of sleep on each night, you might want to create a feature that denoted the average sleep that the student had instead.
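Both examples above fit in one small function. The record layout (`age`, `sleep_hours`) is hypothetical, and treating exactly 18 as an adult is my assumption about where the threshold boundary falls.

```python
def build_features(record):
    """Construct an adult indicator and an average-sleep feature."""
    sleep = record["sleep_hours"]
    return {
        # Continuous age collapsed to an indicator (18 and over counts as adult).
        "is_adult": int(record["age"] >= 18),
        # Several nightly values merged into one more informative feature.
        "avg_sleep": sum(sleep) / len(sleep),
    }


minor = build_features({"age": 17, "sleep_hours": [6, 7, 8]})
adult = build_features({"age": 18, "sleep_hours": [5, 5]})
```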

6. Predictive Modeling

Predictive modeling is where the machine learning finally comes into your data science project. I use the term predictive modeling because I think a good project is not one that just trains a model and obsesses over the accuracy, but also uses comprehensive statistical methods and tests to ensure that the outcomes from the model actually make sense and are significant. Based on the questions you asked in the business understanding stage, this is where you decide which model to pick for your problem. This is never an easy decision, and there is no single right answer. The model (or models, and you should always be testing several) that you end up training will be dependent on the size, type and quality of your data, how much time and computational resources you are willing to invest, and the type of output you intend to derive. There are a couple of different cheat sheets available online which have a flowchart that helps you decide the right algorithm based on the type of classification or regression problem you are trying to solve. The two that I really like are the Microsoft Azure Cheat Sheet and SAS Cheat Sheet.

Once you’ve trained your model, it is critical that you evaluate its success. A process called k-fold cross validation is commonly used to measure the accuracy of a model. It involves separating the dataset into k equally sized groups of instances, training on all the groups except one, evaluating on the group that was left out, and repeating the process until each group has been left out once. This lets every instance be used for both training and evaluation, instead of relying on a single train-test split.
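To show the mechanics, here is a bare-bones sketch of k-fold cross validation in plain Python. The "model" is deliberately trivial (it always predicts the mean of the training targets) so the focus stays on the splitting; the function names are my own. Note that this version splits the data in order, whereas in practice you would usually shuffle the instances first.

```python
def k_fold_indices(n, k):
    """Yield (train_indices, test_indices) for k contiguous folds over n items."""
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, test_idx
        start += size


def cross_validate_mean_model(y, k):
    """Average held-out MSE of a baseline that predicts the training mean."""
    fold_errors = []
    for train_idx, test_idx in k_fold_indices(len(y), k):
        prediction = sum(y[i] for i in train_idx) / len(train_idx)
        mse = sum((y[i] - prediction) ** 2 for i in test_idx) / len(test_idx)
        fold_errors.append(mse)
    return sum(fold_errors) / len(fold_errors)


folds = list(k_fold_indices(10, 3))
baseline_error = cross_validate_mean_model([1.0, 1.0, 1.0, 1.0, 1.0, 1.0], 3)
```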

For classification models, we often test accuracy using PCC (percent correct classification), along with a confusion matrix which breaks down the errors into false positives and false negatives. Plots such as ROC curves, which show the true positive rate plotted against the false positive rate, are also used to benchmark the success of a model. For a regression model, the common metrics include the coefficient of determination (which gives information about the goodness of fit of a model), mean squared error (MSE), and mean absolute error (MAE).
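These metrics are simple enough to compute by hand, which I find helps in understanding what they actually measure. The sketch below assumes binary labels encoded as 0 and 1, and the function names and sample predictions are invented for the example.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary 0/1 labels."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for truth, pred in zip(y_true, y_pred):
        if pred == 1:
            counts["tp" if truth == 1 else "fp"] += 1
        else:
            counts["tn" if truth == 0 else "fn"] += 1
    return counts


def percent_correct(y_true, y_pred):
    """PCC: share of predictions that match the true label, as a percentage."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * correct / len(y_true)


def regression_metrics(y_true, y_pred):
    """MSE, MAE and the coefficient of determination (R squared)."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {
        "mse": ss_res / n,
        "mae": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n,
        "r2": 1 - ss_res / ss_tot,
    }


labels = [1, 0, 1, 1, 0]
predicted = [1, 1, 0, 1, 0]
counts = confusion_counts(labels, predicted)
pcc = percent_correct(labels, predicted)

reg = regression_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
```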

7. Data Visualization

Data visualization is a tricky field, mostly because it seems simple but could be one of the hardest things to do well. That’s because data viz combines the fields of communication, psychology, statistics, and art, with an ultimate goal of communicating the data in a simple yet effective and visually pleasing way. Once you’ve derived the intended insights from your model, you have to represent them in a way that the different key stakeholders in the project can understand.

Again, this is a topic that could be a blog post on its own, so instead of diving deeper into the field of data visualization, I will give a couple of starting points. I personally love working through the analysis and visualization pipeline on an interactive Python notebook like Jupyter, in which I can have my code and visualizations side by side, allowing for rapid iteration with libraries like Seaborn and Bokeh. Tools like Tableau and Plotly make it really easy to drag-and-drop your data into a visualization and manipulate it to get more complex visualizations. If you’re building an interactive visualization for the web, there is no better starting point than D3.js.

8. Business Understanding

Phew. Now that you’ve gone through the entire lifecycle, it’s time to go back to the drawing board. Remember, this is a cycle, and so it’s an iterative process. This is where you evaluate how the success of your model relates to your original business understanding. Does it tackle the problems identified? Does the analysis yield any tangible solutions? If you encountered any new insights during the first iteration of the lifecycle (and I assure you that you will), you can now infuse that knowledge into the next iteration to generate even more powerful insights, and unleash the power of data to derive phenomenal results for your business or project.

If you think this helped your understanding of what a data science lifecycle is, feel free to share it with someone you think might benefit from reading it. I think that data science is a really exciting field that has immense potential to revolutionize the way we make decisions and uncover insights that were previously hidden, and I strongly believe that speaking the language of data is a necessary skill in today’s workplace regardless of what field you’re in. If you have any thoughts on this, or have any links or resources that I can add to this post, contact me at krantigaurav@gmail.com